Getting your content noticed by search engines can feel like shouting into the void. You create amazing pages, but they sit invisible in search results for weeks or months while your competition gets all the traffic. This happens because search engines haven’t indexed your URLs yet.

Rapid URL indexer tools aim to solve this frustrating problem by fast-tracking your pages into search engine indexes. This guide is for website owners, digital marketers, and SEO professionals who need their content found quickly and want to stop waiting around for organic discovery.

We’ll break down how search engine crawling actually works and why some pages get indexed fast while others don’t. You’ll also discover practical rapid URL indexer strategies that can get your pages ranking within days instead of months.

Finally, we’ll cover advanced techniques to keep your indexing performance strong over time. Stop losing potential traffic to slow indexing. Let’s get your content the visibility it deserves.

What Is URL Indexing and Its Critical Role in SEO Success?

What URL Indexing Means for Search Engine Visibility

URL indexing is the process where search engines like Google discover, crawl, analyze, and store your web pages in their massive databases. Think of it as getting your website’s pages added to a giant library catalog that billions of users can search through.

When your URLs are properly indexed, they become eligible to appear in search results whenever someone types in relevant queries. The visibility your website gets in search results depends heavily on SEO URL indexing.

Pages that aren’t indexed simply don’t exist in the eyes of search engines, making them invisible to potential visitors. This makes indexing the foundation of any successful SEO strategy – you can have the most amazing content and perfect optimization, but without proper indexing, your efforts won’t drive organic traffic.

How Search Engines Discover And Process Your Website Content

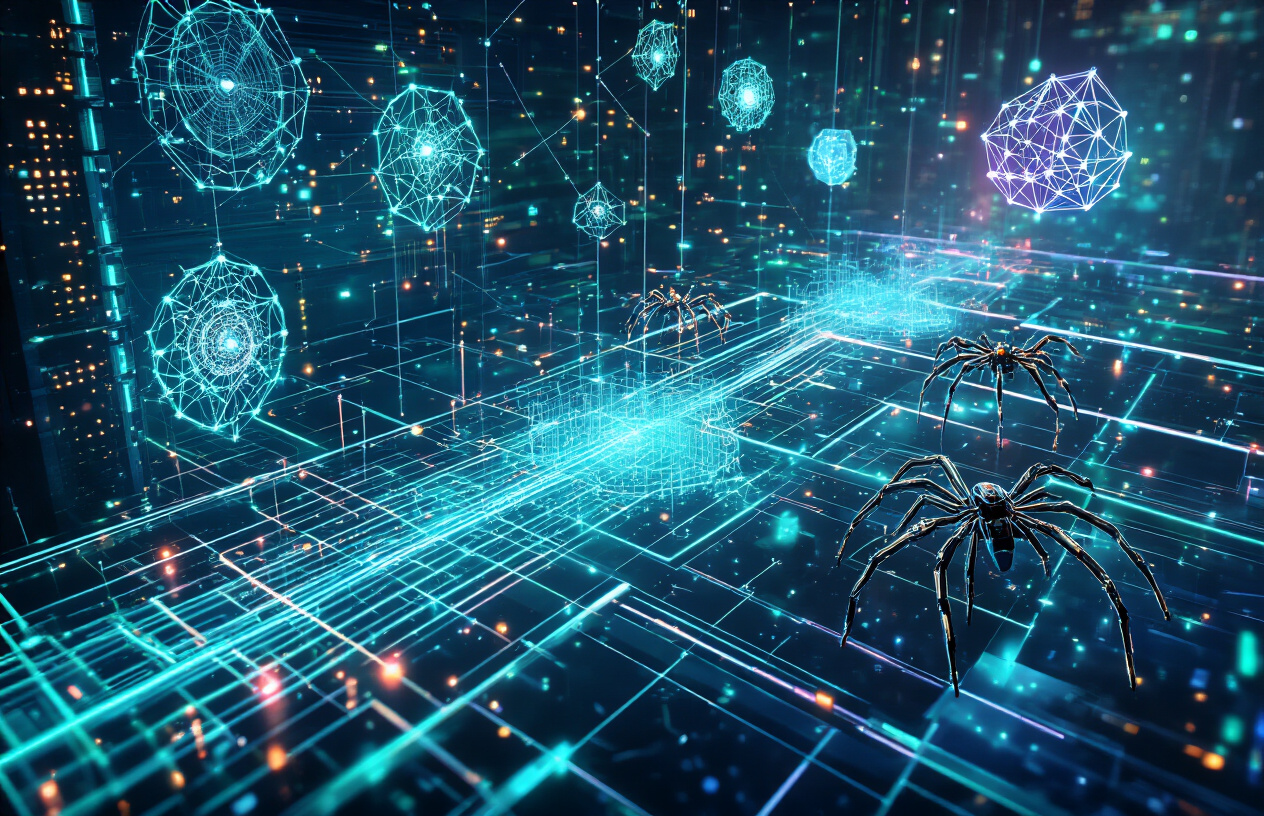

Search engines use automated programs called crawlers or bots to discover and process web content. These bots follow links from page to page, creating a web of connections across the internet. When they find a new URL, they analyze the content, structure, and relevance before deciding whether to add it to their index.

The discovery process happens through several channels:

- Internal linking: Bots follow links within your website to find new pages

- External backlinks: Links from other websites guide crawlers to your content

- XML sitemaps: Direct submission of your site structure to search engines

- Direct URL submission: Manual submission through search console tools

Once discovered, search engines evaluate factors like content quality, page speed, mobile-friendliness, and user experience signals. Pages that meet their quality standards get added to the index, while others may be rejected or delayed.
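To make the "bots follow links" idea concrete, here is a minimal sketch of the link-extraction step a crawler performs on each page. It uses only Python's standard library; the `LinkExtractor` class, the `extract_links` helper, and the example.com URLs are illustrative assumptions, not how any particular search engine implements it.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkExtractor(HTMLParser):
    """Collects absolute link targets from the <a href> tags on one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        # Resolve relative links against the page URL and drop #fragments.
        absolute, _fragment = urldefrag(urljoin(self.base_url, href))
        # Keep only web links (skips mailto:, tel:, javascript:, ...).
        if absolute.startswith(("http://", "https://")) and absolute not in self.links:
            self.links.append(absolute)


def extract_links(base_url, html):
    """Return the de-duplicated list of URLs a crawler could follow from this page."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


# Hypothetical page content for illustration.
sample_html = """
<a href="/blog/new-post">New post</a>
<a href="pricing#plans">Pricing</a>
<a href="https://partner.example/resource">Partner</a>
<a href="mailto:hello@example.com">Email</a>
"""
found = extract_links("https://example.com/", sample_html)
print(found)
```

A real crawler would add each discovered URL to a queue and repeat the process, which is why a page with no inbound links is so hard to find.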

The Connection Between Indexing Speed And Organic Traffic Growth

The speed at which your pages get indexed directly impacts how quickly you can start receiving organic traffic. Faster indexing means your content becomes searchable sooner, giving you a competitive advantage in capturing search demand.

Fast Google indexing becomes especially critical for:

- Time-sensitive content like news articles or trending topics

- New product launches or promotional campaigns

- Fresh blog posts competing for seasonal keywords

- E-commerce sites adding new inventory

Sites whose new pages are indexed quickly can start seeing traffic within days rather than weeks or months. This speed advantage compounds over time, as early-indexed pages have more opportunities to build authority and climb search rankings.

Common Indexing Challenges That Slow Down Website Visibility

Several technical and strategic issues can create bottlenecks in the indexing process. Poor website architecture tops the list – sites with broken internal linking, confusing navigation, or orphaned pages make it difficult for crawlers to discover all your content.

Technical problems that frequently delay indexing include:

| Challenge | Impact on Indexing |
|---|---|
| Slow page load speeds | Crawlers may time out or skip pages |
| Duplicate content | Search engines may ignore similar pages |
| Missing XML sitemaps | Reduced discoverability of new content |
| Robots.txt errors | Accidentally blocking important pages |
| Poor mobile optimization | Lower priority in mobile-first indexing |

Content quality issues also play a major role. Pages with thin content, excessive ads, or poor user experience signals often get deprioritized in the indexing queue. Search engines want to index valuable content that serves user needs, so low-quality pages may wait longer or never make it into the index at all.

Website owners frequently underestimate how these challenges compound. A site might have amazing content, but if technical barriers prevent efficient crawling, that content remains invisible to potential customers searching for exactly what the business offers.
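Orphaned pages are one of the easier barriers to check for yourself. The sketch below assumes you already have two inputs from your own crawl or CMS export: a list of URLs you expect to be indexed and a map of which page links to which. The function name and sample URLs are hypothetical.

```python
def find_orphan_pages(known_urls, internal_links, homepage):
    """Return known URLs that no other page links to (the homepage is exempt).

    known_urls: iterable of URLs you expect to be indexed (e.g. from a sitemap).
    internal_links: dict mapping each page URL to the list of URLs it links to.
    """
    linked_to = set()
    for source, targets in internal_links.items():
        for target in targets:
            if target != source:  # a self-link does not help discovery
                linked_to.add(target)
    return sorted(url for url in known_urls if url != homepage and url not in linked_to)


# Hypothetical site data for illustration.
sitemap_urls = [
    "https://example.com/",
    "https://example.com/blog/",
    "https://example.com/blog/post-1",
    "https://example.com/landing/old-campaign",
]
internal_links = {
    "https://example.com/": ["https://example.com/blog/"],
    "https://example.com/blog/": ["https://example.com/blog/post-1", "https://example.com/"],
    "https://example.com/landing/old-campaign": ["https://example.com/"],
}
orphans = find_orphan_pages(sitemap_urls, internal_links, "https://example.com/")
print(orphans)
```

Here the old campaign page links out to the homepage, but nothing links in to it, so a crawler following internal links would never reach it.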

The Science Behind Search Engine Crawling and Indexing Processes

How Search Engine Bots Prioritize Which Pages to Crawl First

Search engine bots operate like digital detectives with a massive to-do list, making split-second decisions about which pages deserve their attention first. The crawling process isn’t random – it follows sophisticated algorithms that evaluate multiple signals to determine priority.

Crawl budget allocation plays a crucial role in this process. Google’s crawl budget for a site reflects two things: how much crawling the server can handle without slowing down (crawl capacity) and how much Google wants to crawl the site (crawl demand), which rises with popularity and content freshness. Sites with fast, stable servers and fresh, valuable content tend to be crawled more, meaning their pages get discovered and indexed faster.

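Internal linking structure significantly influences bot prioritization. Pages linked from the homepage or other high-authority pages get crawled more frequently. XML sitemaps act as roadmaps, guiding bots toward the pages you consider important. Note that Google states it ignores the sitemap priority field, so a sitemap helps with discovery rather than with ranking your pages against each other.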

Content freshness signals also drive crawling decisions. Pages that update regularly, publish new content, or generate user engagement signal to bots that they’re worth frequent visits. This creates opportunities for rapid URL indexer tools to capitalize on these natural crawling patterns.

| Priority Factor | Impact Level | Description |
|---|---|---|
| Homepage Links | High | Direct links from homepage get immediate attention |
| Recent Updates | High | Fresh content triggers more frequent crawls |
| User Engagement | Medium | Pages with traffic and interactions rank higher |
| Deep Page Links | Low | Pages buried deep in site structure wait longer |
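The "deep page" row in the table can be measured as click depth: the minimum number of links a crawler must follow from the homepage to reach a page. The following is a small breadth-first search sketch over a hypothetical link graph; the paths and the `click_depths` name are assumptions for illustration.

```python
from collections import deque


def click_depths(link_graph, homepage):
    """Breadth-first search: minimum number of clicks from the homepage to each page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph (page -> pages it links to).
link_graph = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/post-1"],
    "/blog/post-1": ["/blog/archive/2019/old-post"],
    "/products/": ["/"],
}
depths = click_depths(link_graph, "/")
for page, depth in sorted(depths.items(), key=lambda item: item[1]):
    print(depth, page)
```

Pages that come out with a high depth are candidates for a link from the homepage or a hub page. Pages missing from the result are not reachable through internal links at all.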

Factors That Determine Indexing Frequency and Speed

The speed at which search engines index new content depends on several interconnected factors that webmasters can influence through strategic SEO practices. Understanding these elements helps optimize for faster search engine indexing.

Server response time directly impacts how quickly bots can process your content. Slow-loading pages create bottlenecks that delay the entire indexing process. Sites with response times under 200 milliseconds typically see faster indexing compared to slower alternatives.

Content quality and uniqueness serve as major ranking signals for indexing priority. Search engines prioritize original, valuable content over duplicate or thin pages. Pages that provide comprehensive answers to user queries often get indexed within hours rather than days.

Technical SEO factors create the foundation for efficient crawling. Proper robots.txt configuration, clean URL structures, and optimized meta tags help bots understand and process content more effectively. Sites with technical issues experience delayed indexing regardless of content quality.

Publishing frequency establishes crawling patterns. Websites that publish content regularly train search engine bots to visit more frequently, creating opportunities for immediate indexing of new pages. This predictable pattern helps rapid URL indexer strategies work more effectively.

External signals like social media mentions, backlinks, and traffic spikes can trigger additional crawling sessions. When other websites link to your content or users share it widely, search engines interpret these signals as indicators of content value and prioritize indexing accordingly.

The Role of Website Authority in Faster Content Discovery

Website authority acts as a multiplier for all indexing efforts, dramatically accelerating how quickly search engines discover and process new content. High-authority sites enjoy preferential treatment that can mean the difference between same-day indexing and weeks of waiting.

Domain authority metrics influence crawling frequency and depth. Established websites with strong backlink profiles and consistent traffic patterns receive larger crawl budgets and more frequent bot visits. This authority translates directly into faster content discovery for SEO URL indexing efforts.

Trust signals accumulated over time create shortcuts in the indexing process. Sites with histories of publishing quality content, maintaining technical excellence, and providing positive user experiences build trust with search engines. This trust manifests as reduced review periods and faster approval for new content.

Topical authority within specific niches can accelerate indexing for related content. Websites recognized as authoritative sources in their fields often see new content indexed immediately, as search engines assume the content will be valuable to users searching for related topics.

The compound effect of authority means that SEO indexing strategies work more effectively on established sites. However, newer websites can still achieve fast Google indexing by focusing on content quality, technical optimization, and building authority through consistent publishing and relationship building within their industry.

Authority also influences how search engines handle URL submission for SEO purposes, with trusted sites receiving priority processing for submitted URLs through various indexing channels.

Rapid URL Indexer Tools and Their Game-Changing Benefits

How Automated Indexing Tools Accelerate The Discovery Process

Automated rapid URL indexer tools work by establishing direct communication channels with search engines, bypassing the traditional waiting game that webmasters face.

These sophisticated systems submit your URLs through multiple pathways simultaneously – including XML sitemaps, direct API calls, and strategic internal linking networks. The technology behind these tools leverages search engine indexing protocols to ping crawlers immediately when new content goes live.

The acceleration happens because these tools understand exactly how search engines prioritize crawl requests. They format submissions in ways that grab crawler attention faster than organic discovery methods.

Instead of waiting weeks or months for natural indexing, your pages can appear in search results within hours or days. Most premium indexing tools also maintain relationships with high-authority domains that regularly get crawled.

By strategically placing your URLs within these networks, they create multiple discovery paths that search engines can follow back to your content.
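One publicly documented example of a "direct API call" is the IndexNow protocol, which lets a site notify participating search engines (Bing among them) that URLs have changed. The sketch below only builds the request and does not send it. The host, key, and URLs are placeholders, and it assumes the key file is hosted at the site root as `<key>.txt`. Whether a given indexing tool uses IndexNow internally is not something this article can confirm.

```python
import json
from urllib.parse import urlparse
from urllib.request import Request

INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(host, key, urls):
    """Build (but do not send) an IndexNow bulk submission for one host."""
    # IndexNow expects every submitted URL to belong to the declared host.
    foreign = [url for url in urls if urlparse(url).netloc != host]
    if foreign:
        raise ValueError(f"URLs outside {host}: {foreign}")
    payload = {
        "host": host,
        "key": key,
        # Assumption: the key file is published at the site root.
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": list(urls),
    }
    return Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


# Placeholder values for illustration only.
request = build_indexnow_request(
    "www.example.com",
    "hypothetical-key-123",
    ["https://www.example.com/blog/new-post", "https://www.example.com/products/widget"],
)
print(request.get_method(), request.full_url)
# To submit for real: urllib.request.urlopen(request), not executed in this sketch.
```

Building the request separately from sending it keeps the validation testable and makes it easy to log exactly what was submitted.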

Key Features That Separate Premium Indexing Services From Basic Options

Premium SEO indexing tools offer features that basic services simply can’t match. Advanced tools provide real-time indexing status updates, showing you exactly when search engines acknowledge your URLs. They also offer bulk submission capabilities, allowing you to index hundreds of pages simultaneously instead of submitting one at a time.

| Feature | Basic Tools | Premium Tools |
|---|---|---|
| Submission Volume | 10-50 URLs/day | 500+ URLs/day |
| Success Tracking | Basic reports | Real-time analytics |
| API Integration | Limited | Full API access |
| Support Channels | Email only | Priority support |

Quality indexing services also provide detailed analytics showing which submission methods work best for your specific site. They track success rates across different search engines and adjust their strategies accordingly.

Many premium tools integrate with popular SEO platforms, automatically detecting new content and submitting it for indexing without manual intervention.

Time Savings And Efficiency Gains From Using Specialized Indexing Tools

The time savings from using a rapid URL indexer are substantial. Manual submission methods through Google Search Console can take 15-30 minutes per batch, while automated tools handle the same volume in seconds.

This efficiency becomes crucial when launching new product pages, blog posts, or entire website sections that need immediate search visibility. Professional SEO teams often manage dozens of client websites simultaneously.

Automated indexing tools allow them to set up submission workflows that run continuously in the background. This means new content gets indexed consistently without requiring constant manual attention.

The efficiency gains extend beyond just submission time. These tools eliminate the guesswork around whether pages are getting indexed properly. Instead of manually checking search results or using Google Search Console to verify indexing status, automated tools provide clear reporting on successful submissions.

Cost-Effectiveness Compared To Traditional SEO Waiting Periods

When you calculate the opportunity cost of delayed indexing, specialized tools become incredibly cost-effective. Every day your content remains unindexed represents lost potential traffic, leads, and revenue.

For e-commerce sites launching new products or news websites publishing timely content, these delays can cost thousands in missed opportunities. Traditional SEO approaches might save money upfront by avoiding tool costs, but they often result in higher long-term expenses.

Delayed indexing means longer waits for organic traffic to build, which often leads to increased reliance on paid advertising to maintain visibility during indexing delays.

Fast Google indexing through specialized tools also reduces the resources needed for ongoing SEO maintenance. Teams spend less time monitoring indexing status and troubleshooting crawl issues, allowing them to focus on content creation and strategic optimization work that drives better results.

Implementing Fast Indexing Strategies For Maximum Search Visibility

Best Practices For Submitting URLs To Indexing Services

When submitting URLs to indexing services, timing and frequency matter more than most people realize. Google Search Console remains the gold standard for URL submission, but don’t limit yourself to just one platform.

Submit your most important pages within 24 hours of publication, and batch submit less critical content weekly to avoid overwhelming crawlers. Quality beats quantity every time. Rather than submitting every single page on your site, focus on your money pages, fresh content, and updated articles.

Create a priority system where homepage updates, cornerstone content, and revenue-generating pages get immediate submission through your rapid URL indexer tool.

Structure your submissions strategically. Group related URLs together and submit them in logical sequences. If you’re launching a new product category, submit the main category page first, then individual product pages 24-48 hours later. This helps search engines understand your site architecture and improve page indexing efficiency.
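The priority system described above can be written down as a simple schedule. This is a rough sketch: the page types and the delay values are assumptions loosely based on the guidance in this section (important pages on day zero, product pages about two days after their category page, everything else in a weekly batch), so adjust them to your own site.

```python
from datetime import date, timedelta

# Hypothetical page types mapped to submission delays in days after publication.
SUBMISSION_DELAY_DAYS = {
    "homepage": 0,
    "cornerstone": 0,
    "revenue": 0,
    "category": 0,
    "product": 2,  # roughly 24-48 hours after the category page
    "other": 7,    # weekly batch for less critical content
}


def plan_submissions(pages, published_on):
    """Group URLs by the date they should be submitted.

    pages: list of (url, page_type) tuples.
    Returns a dict of submission date -> list of URLs, ordered by date.
    """
    plan = {}
    for url, page_type in pages:
        delay = SUBMISSION_DELAY_DAYS.get(page_type, SUBMISSION_DELAY_DAYS["other"])
        plan.setdefault(published_on + timedelta(days=delay), []).append(url)
    return dict(sorted(plan.items()))


# Hypothetical product category launch.
pages = [
    ("https://example.com/shoes/", "category"),
    ("https://example.com/shoes/runner-1", "product"),
    ("https://example.com/shoes/runner-2", "product"),
    ("https://example.com/about/team", "other"),
]
plan = plan_submissions(pages, date(2024, 5, 1))
for day, urls in plan.items():
    print(day.isoformat(), urls)
```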

Optimizing Your Content Structure For Rapid Crawler Recognition

Clean, semantic HTML structure acts like a roadmap for search engine crawlers. Use proper header tags (H1, H2, H3) in hierarchical order, and make sure your main keyword appears in your H1 tag. Crawlers love predictable patterns, so maintain consistent URL structures across your site.
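A quick way to audit heading order is to collect the heading levels in document order and flag jumps. The sketch below uses Python's standard HTML parser. Treating "exactly one H1" and "no skipped levels" as rules is a common convention assumed here for illustration, not a documented search engine requirement.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records the level (1-6) of every heading tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html):
    """Return a list of human-readable problems with the heading hierarchy."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    issues = []
    if levels.count(1) != 1:
        issues.append(f"expected exactly one h1, found {levels.count(1)}")
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            issues.append(f"h{previous} is followed by h{current} (skipped a level)")
    return issues


# Hypothetical page fragments for illustration.
good_page = "<h1>Guide</h1><h2>Setup</h2><h3>Install</h3><h2>Usage</h2>"
bad_page = "<h1>Guide</h1><h2>Setup</h2><h4>Install</h4>"
print(heading_issues(good_page))
print(heading_issues(bad_page))
```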

Internal linking creates pathways for crawlers to discover new content faster. When you publish new content, immediately add internal links from high-authority pages on your site. This signal tells crawlers that your new content is valuable and worth indexing quickly.

Page loading speed directly impacts crawler efficiency. Compress images, minify CSS and JavaScript, and use a content delivery network (CDN) to ensure crawlers can access your content without delays. A slow-loading page often gets abandoned by crawlers before they finish indexing it.

Create XML sitemaps that update automatically when you add new content. Submit these sitemaps through Google Search Console and other webmaster tools to give crawlers a complete overview of your site structure.
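If your CMS does not generate a sitemap for you, regenerating one on publish is not much code. This is a minimal sketch using the standard library and the sitemaps.org namespace. The `build_sitemap` helper and the page list are hypothetical, and a real site would pull URLs and modification dates from its database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from an iterable of (url, last_modified) pairs.

    last_modified is an ISO 8601 date string such as "2024-05-01".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages for illustration.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/blog/new-post", "2024-05-03"),
])
print(sitemap_xml)
```

Calling a function like this from your publish hook, then writing the result to `/sitemap.xml`, keeps the file in step with new content.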

Strategic Timing For Indexing New and Updated Content

Publishing timing can significantly impact how quickly your content gets indexed. Search engines tend to crawl more actively during business hours in major time zones. Publishing between 9 AM and 5 PM EST or PST often results in faster discovery times.

Fresh content gets priority treatment from search engines. When updating existing pages, make substantial changes rather than minor tweaks. Add new sections, update statistics, or include recent examples to signal that your content deserves re-crawling.

Seasonal content requires advance planning for SEO indexing strategies. Submit holiday-related content 4-6 weeks before peak season to ensure proper indexing. Breaking news or trending topics should be submitted immediately after publication using your SEO indexing tool.

Batch similar content submissions to create topical authority signals. If you’re launching a series of related articles, submit them over consecutive days rather than all at once. This approach helps search engines understand your expertise in specific topic areas.

Monitoring And Measuring Indexing Success Rates

Track your indexing performance using Google Search Console’s Page indexing report (formerly the Coverage report). Check this weekly to identify pages that aren’t getting indexed and understand why. Look for patterns in indexing failures – they often reveal technical issues or content quality problems.

Set up automated alerts for indexing status changes. Tools like Ahrefs or SEMrush can notify you when pages drop from or get added to search indexes. This real-time monitoring helps you respond quickly to indexing issues.

Create a tracking spreadsheet that records submission dates, indexing dates, and the time gap between them. This data reveals which types of content get indexed fastest and helps optimize your rapid URL indexer strategy.
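Once the spreadsheet exists, the time gap is easy to compute. The sketch below assumes a CSV export with the hypothetical columns `url`, `content_type`, `submitted_on`, and `indexed_on` (ISO dates, with `indexed_on` left blank for pages not yet indexed) and reports the median lag in days per content type.

```python
import csv
import io
from datetime import date
from statistics import median


def indexing_lag_by_type(csv_text):
    """Median days between submission and indexing, grouped by content type."""
    lags = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        if not row["indexed_on"]:
            continue  # not indexed yet, so there is no lag to measure
        submitted = date.fromisoformat(row["submitted_on"])
        indexed = date.fromisoformat(row["indexed_on"])
        lags.setdefault(row["content_type"], []).append((indexed - submitted).days)
    return {content_type: median(values) for content_type, values in lags.items()}


# Hypothetical tracking data for illustration.
tracking_csv = "\n".join([
    "url,content_type,submitted_on,indexed_on",
    "https://example.com/blog/a,blog,2024-03-01,2024-03-03",
    "https://example.com/blog/b,blog,2024-03-02,2024-03-06",
    "https://example.com/p/1,product,2024-03-01,2024-03-02",
    "https://example.com/p/2,product,2024-03-05,",
])
lag_report = indexing_lag_by_type(tracking_csv)
print(lag_report)
```

The median is used rather than the mean so that one page stuck for weeks does not hide how the typical page behaves.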

Monitor your organic traffic patterns to understand indexing effectiveness. New pages should start showing impressions in Search Console within 1-3 days if indexing is working properly. Longer delays suggest technical or content issues.

Troubleshooting Common Indexing Delays and Obstacles

Duplicate content is the most common indexing killer. Use canonical tags properly and avoid publishing substantially similar content across multiple URLs. Search engines often refuse to index pages they perceive as duplicates.
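To see which of your URLs point at the same canonical, you can read the `rel="canonical"` link from each page and group by it. This is a sketch over HTML you have already fetched. The helper names and sample pages are hypothetical, and treating a page without a canonical tag as its own canonical is a simplifying assumption.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Captures the href of the first <link rel="canonical"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")


def group_by_canonical(pages):
    """pages: dict of url -> html. Returns canonical URL -> list of URLs declaring it."""
    groups = {}
    for url, html in pages.items():
        finder = CanonicalFinder()
        finder.feed(html)
        # Assumption: a page with no canonical tag is treated as its own canonical.
        groups.setdefault(finder.canonical or url, []).append(url)
    return groups


# Hypothetical fetched pages for illustration.
pages_html = {
    "https://example.com/shoes": '<link rel="canonical" href="https://example.com/shoes">',
    "https://example.com/shoes?sort=price": '<link rel="canonical" href="https://example.com/shoes"/>',
    "https://example.com/about": "<title>About</title>",
}
canonical_groups = group_by_canonical(pages_html)
print(canonical_groups)
```

Groups with more than one URL are expected for filtered or sorted variants. A near-duplicate page that ends up alone in its own group is worth a closer look.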

Technical errors like 5XX server errors, broken redirects, or crawl budget issues can prevent indexing. Regularly audit your site for these problems using tools like Screaming Frog or Google Search Console’s Crawl Stats report.

Low-quality content signals can delay indexing indefinitely. Pages with thin content, excessive ads, or poor user experience metrics often get deprioritized. Focus on creating comprehensive, valuable content that serves genuine user needs.

Mobile-first indexing means your mobile version must be crawler-friendly. Ensure your mobile site has the same content as your desktop version and loads quickly on mobile devices. Google primarily uses the mobile version for indexing and ranking decisions.

Robots.txt misconfiguration can accidentally block important pages from crawlers. Regularly review your robots.txt file and check how Google fetched and parsed it using Google Search Console’s robots.txt report, which replaced the older robots.txt Tester tool. Even small mistakes can prevent entire sections of your site from getting crawled.

Advanced Techniques For Sustained Indexing Performance

Building Internal Linking Structures That Support Faster Indexing

Strong internal linking acts as a roadway system for search engine crawlers, guiding them efficiently through your website’s content. When you create strategic connections between related pages, you’re essentially building a spider web that helps search engines discover and index new content faster.

The key lies in creating hub pages that link to multiple related articles while maintaining a logical hierarchy. Your main category pages should connect to subcategory pages, which then link to individual posts or product pages. This cascading structure ensures that SEO URL indexing happens more systematically.

Fresh content benefits most from strategic internal links. When you publish new articles, immediately link to them from existing high-authority pages on your site. This practice signals to search engines that the new content is valuable and deserves crawling attention. Use contextual anchor text that includes relevant keywords naturally within the content flow.

Consider implementing breadcrumb navigation and related post sections to maximize crawl paths. These elements create additional linking opportunities that support rapid URL indexer goals by providing multiple entry points for search engine bots.

Leveraging XML Sitemaps and Robots.txt for Improved Crawler Efficiency

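XML sitemaps serve as detailed maps for search engines, listing all important URLs on your website along with optional metadata such as each page’s last modification date, update frequency, and priority. Google documents that it uses the last modification date when it is consistently accurate and ignores the update frequency and priority fields, so keep the lastmod value truthful. Modern SEO indexing tools rely heavily on well-structured sitemaps to understand website architecture quickly.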

Create separate sitemaps for different content types – one for blog posts, another for product pages, and additional ones for images or videos. This segmentation helps search engines prioritize crawling based on content importance and update frequency.

Submit these sitemaps directly to Google Search Console and Bing Webmaster Tools for faster recognition. Your robots.txt file works alongside sitemaps to direct crawler behavior. Use it to keep crawlers away from admin pages or low-value sections while clearly pointing to your sitemap locations. Keep in mind that robots.txt controls crawling, not indexing: to keep a crawlable page out of the index, use a noindex directive instead.

Here’s what an optimized robots.txt structure looks like:

| Directive | Purpose | Example |
|---|---|---|
| Allow | Permit crawler access | Allow: /blog/ |
| Disallow | Block specific paths | Disallow: /admin/ |
| Sitemap | Reference sitemap location | Sitemap: https://yoursite.com/sitemap.xml |
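Before deploying a robots.txt change, you can check it locally with Python's built-in `urllib.robotparser`. The sketch below parses the three example directives from the table, with a `User-agent: *` line added because the rules need a group to belong to, and asks whether specific URLs may be crawled. The test URLs are placeholders, and Python's parser is a reasonable approximation rather than an exact copy of any search engine's matching logic.

```python
from urllib.robotparser import RobotFileParser

# The example directives from the table, as they would appear in robots.txt.
robots_lines = [
    "User-agent: *",
    "Allow: /blog/",
    "Disallow: /admin/",
    "Sitemap: https://yoursite.com/sitemap.xml",
]

parser = RobotFileParser()
parser.parse(robots_lines)  # parse in memory, no network request is made

checks = {
    url: parser.can_fetch("*", url)
    for url in [
        "https://yoursite.com/blog/new-post",
        "https://yoursite.com/admin/login",
        "https://yoursite.com/about",
    ]
}
for url, allowed in checks.items():
    print("ALLOWED" if allowed else "BLOCKED", url)

# site_maps() returns the Sitemap entries found in the file (Python 3.8+).
print(parser.site_maps())
```

Running a check like this against your list of important URLs is a cheap guard against accidentally blocking a whole section.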

Regular sitemap updates are crucial for maintaining search engine indexing efficiency. Whenever you publish new content, ensure your sitemap reflects these changes within hours, not days.

Creating Content Update Schedules That Maintain Consistent Visibility

Search engines favor websites that demonstrate consistent activity and fresh content creation. Developing a systematic approach to content updates helps maintain steady crawl rates and supports fast Google indexing of new materials.

Establish content refresh cycles based on your industry’s pace and audience expectations. News websites might update hourly, while B2B service providers could focus on weekly or bi-weekly publishing schedules.

The key is consistency rather than frequency – search engines adapt their crawling patterns to match your publishing rhythm. Implement content audit processes that identify pages needing updates, refreshes, or improvements.

Old content with updated information, new statistics, or expanded sections often gets re-indexed quickly because it represents genuine value additions rather than completely new pages competing for attention.

Track your content performance using analytics to identify which types of updates generate the best indexing results. Some pages benefit from minor tweaks and additions, while others require complete rewrites to maintain search visibility.

This data-driven approach to SEO indexing strategies ensures your efforts focus on activities that produce measurable improvements in search engine recognition and ranking performance.

Time your major content releases during peak crawler activity periods, typically weekdays during business hours in your target market’s time zone.

Getting your pages indexed quickly can make or break your SEO strategy. When search engines discover and catalog your content faster, you gain a competitive edge that translates into better rankings and increased organic traffic.

The combination of understanding crawling processes, using rapid indexing tools, and implementing smart strategies creates a powerful approach to search visibility. Don’t let your great content sit invisible while competitors grab the spotlight.

Start by submitting your URLs to Google Search Console, then explore rapid indexing tools that can speed up the discovery process. Focus on creating high-quality content with proper technical optimization, and watch as your pages climb the search results faster than ever before.